Logic behind the machine recognizing digits from the handwritten image

Presented by Madhan Kumar Selvaraj

As shown in the above gif image, the first layer is the input layer, the next two layers are the hidden layers, and the final one is the output layer. The input layer contains 784 neurons, one for each pixel of the 28 * 28 image, and the output layer contains 10 neurons because the digit in our image is one of the values 0 to 9.

Mobile phones have become a third hand for this generation, and in our day-to-day life we use the artificial intelligence built into them without even knowing it. I expect everyone has used the handwriting input feature of the mobile keypad. It recognizes the characters or digits as you draw them.

It may look simple, but the process behind it is very complex, and I am using this blog to explain it as clearly and simply as possible.

What is this blog about?

This blog concentrates on the process behind the Artificial Neural Network (ANN) and the Convolutional Neural Network (CNN). Both are deep learning techniques, and deep learning is a part of Artificial Intelligence (AI). As a bonus for my readers, I have added a digit recognition project that uses OpenCV and CNN.

What is deep learning?

Humans learn things by seeing or hearing them, and they are able to recognize those things when they see them again. For example, a child recognizes whether an animal is a cat or a dog from what their parents have taught them. In the same way, the machine learns from examples, and when something new arrives, the machine compares it with its previous data and then classifies it. It also keeps learning from the new data.

Artificial Neural Network

We will take a good example to understand the concepts better. Here I am using an image with a resolution of 28 * 28, where the height and width of the image are both 28 pixels. The total number of pixels in the image is 784 (the height multiplied by the width).

An ANN consists of three kinds of layers, and each value in a layer is called a neuron:

- Input layer

- Hidden layers

- Output layer
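
A minimal sketch of how the 28 * 28 image maps onto the input layer, assuming the image is stored as a nested list and using the layer sizes mentioned in this blog (784 inputs, two hidden layers of 16 neurons, 10 outputs). The variable names and the single white pixel are only for illustration.

```python
# Hypothetical 28 x 28 grayscale image: 0 is black, 255 is white.
HEIGHT, WIDTH = 28, 28
image = [[0 for _ in range(WIDTH)] for _ in range(HEIGHT)]
image[14][10] = 255  # one white pixel, purely for illustration

# Flatten row by row so that each pixel becomes one input neuron.
input_layer = [pixel for row in image for pixel in row]

# Layer sizes: 784 inputs, two hidden layers of 16 neurons, 10 outputs.
layer_sizes = [len(input_layer), 16, 16, 10]

print(layer_sizes)             # [784, 16, 16, 10]
print(input_layer.index(255))  # 402, which is 14 * 28 + 10
```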

Forward propagation

Weight in ANN

Let's assume the image has a black background and the number 0 is drawn in white. Each pixel (neuron) has a value: 0 for black, 255 for white, and in-between values for the remaining shades. The digit 0 looks like one semi-circle joined to an inverted semi-circle, so the pixel values in that portion of the image should be high.

For the dark, blurred, and empty pixels, the weight values will be positive, negative, and zero respectively. The weights are initially assigned random values, and those values are then modified by the backward propagation technique. Weights play an important role in deep learning, and we can think of a weight as the priority given to a particular input.
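
The idea of a weight as a priority can be sketched as a weighted sum, where every input value is multiplied by its weight and the results are added up. The pixel values and weights below are made-up numbers, chosen only to show the arithmetic, and `weighted_sum` is a hypothetical helper name.

```python
def weighted_sum(pixels, weights):
    """Multiply every input value by its weight and add the results."""
    return sum(p * w for p, w in zip(pixels, weights))

# Four hypothetical pixel values scaled to the range 0..1 (0 = black, 1 = white).
pixels = [0.0, 1.0, 1.0, 0.0]

# Hypothetical weights: higher priority for the two middle pixels.
weights = [-0.5, 0.8, 0.6, -0.5]

print(weighted_sum(pixels, weights))  # about 1.4
```

A real network computes one such sum for every neuron in the next layer, each with its own set of weights.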

Role of bias in ANN?

Before learning about the bias, we need to know about the activation function. It has a threshold value, and the weighted sum of a neuron's inputs is compared against it. The activation function determines which neurons should be triggered, or activated. In some cases, unwanted neurons are triggered or useful neurons are not triggered. To avoid that problem, we add a value to the weighted sum, and that value is called the bias.

Activation function

As explained before, the activation function decides which neurons should be activated. Each neuron's value passes through the activation function when it travels from one layer to the next.

If there is no activation function in a deep learning network, the output is a linear function (a degree-one polynomial) of the input, no matter how many layers there are. A linear function is easy to solve, but the model cannot learn complex patterns from different inputs, and it behaves like a simple linear model. It would not be useful for complex data such as images, audio, and video.

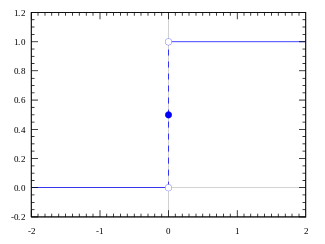

Threshold activation function (Binary step)

The activation A is “activated” if y > threshold and “not activated” otherwise, where y is the input to the activation function. Equivalently, A = 1 if y > threshold, and 0 otherwise.
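
A small sketch of the binary step function, together with the bias described earlier. It assumes a threshold of 0 and made-up input values, and the function names are hypothetical.

```python
def binary_step(y, threshold=0.0):
    """Return 1 if y is above the threshold, otherwise 0."""
    return 1 if y > threshold else 0

def neuron_output(weighted_sum, bias):
    """Add the bias to the weighted sum, then apply the step function."""
    return binary_step(weighted_sum + bias)

weak_signal = 0.3  # hypothetical weighted sum from a faint stroke

print(neuron_output(weak_signal, bias=0.0))   # 1: the neuron is activated
print(neuron_output(weak_signal, bias=-1.0))  # 0: the bias keeps it inactive
```

With a bias of -1.0, the weighted sum has to exceed 1.0 before the neuron is activated, which is how the bias stops an unwanted neuron from being triggered.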

Sigmoid function (Logistic function)

The Sigmoid function takes a value as input and outputs another value between 0 and 1. It is non-linear and easy to work with when constructing a neural network model. The good part about this function is that it is continuously differentiable for all values of z and has a fixed output range.
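
The sigmoid and its derivative can be written in a few lines. This is a plain Python sketch using only the `math` module; a real project would more likely use a vectorized library.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: 1 / (1 + e^(-z)), always between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    """Derivative of the sigmoid: s * (1 - s), where s = sigmoid(z)."""
    s = sigmoid(z)
    return s * (1.0 - s)

for z in (-5.0, 0.0, 5.0):
    print(z, round(sigmoid(z), 4))
# -5.0 0.0067
# 0.0 0.5
# 5.0 0.9933

print(sigmoid_derivative(0.0))  # 0.25
```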

Hyperbolic tangent function (Tanh)

The Tanh function is a rescaled and shifted version of the sigmoid function. What we saw in Sigmoid was that the value of f(z) is bounded between 0 and 1; however, in the case of Tanh, the values are bounded between -1 and 1.
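
The link between the two functions is the identity tanh(z) = 2 * sigmoid(2z) - 1. The sketch below checks it numerically against Python's built-in `math.tanh`; the helper name `tanh_from_sigmoid` is only for illustration.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, bounded between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh_from_sigmoid(z):
    """tanh(z) = 2 * sigmoid(2z) - 1, bounded between -1 and 1."""
    return 2.0 * sigmoid(2.0 * z) - 1.0

for z in (-2.0, 0.0, 2.0):
    # The two columns agree up to floating-point rounding.
    print(z, math.tanh(z), tanh_from_sigmoid(z))
```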

Rectified Linear Unit Function (ReLU)

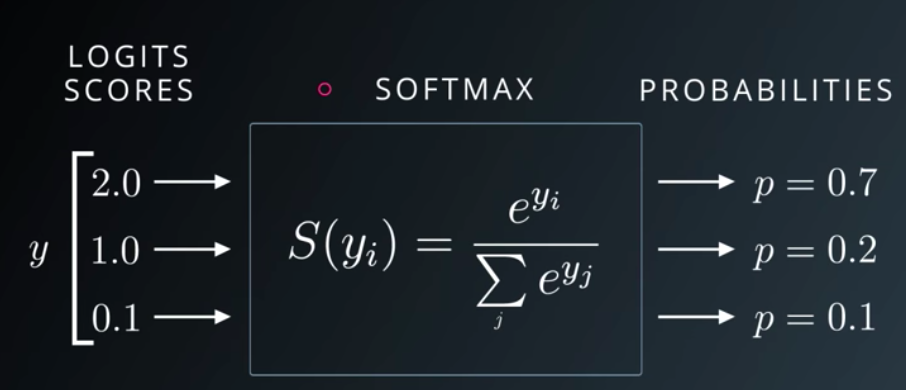

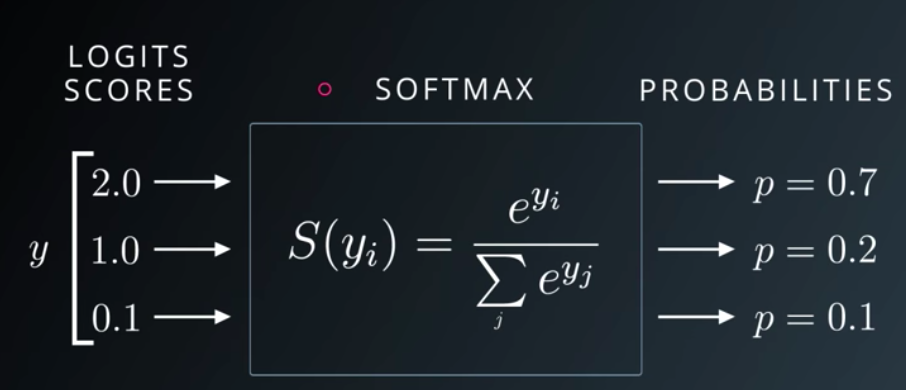

Softmax function

The Softmax function is a wonderful activation function that turns numbers, also known as logits, into probabilities that sum to one. It outputs a vector that represents the probability distribution over a list of potential outcomes.
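
A short sketch of softmax applied to the 10 output neurons. The logits are made-up values, and subtracting the largest logit before exponentiating is a common numerical safeguard rather than something this blog's project is known to do.

```python
import math

def softmax(logits):
    """Convert a list of raw scores (logits) into probabilities that sum to 1."""
    largest = max(logits)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(v - largest) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw outputs of the 10 output neurons (digits 0 to 9).
logits = [1.0, 0.5, 0.2, 3.0, 0.1, 0.0, -1.0, 0.3, 0.4, 0.2]
probabilities = softmax(logits)

predicted_digit = probabilities.index(max(probabilities))
print(predicted_digit)               # 3
print(round(sum(probabilities), 6))  # 1.0
```

The predicted digit is simply the index of the output neuron with the highest probability.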

Cost function

The machine cannot find the actual digit from this process alone, because the weight values are assigned randomly. To fix this, we need to find the error, which is the difference between the actual value and the predicted value. The function that measures this error is called the cost function.

There are many ways to define the cost function; here we square the difference to eliminate negative values. Our model classifies images effectively when the cost is at its minimum. That minimum is found with the gradient descent method, which is one of the most powerful methods in data science.
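
A sketch of the squared-difference cost for one image, assuming the actual value is encoded as a list with 1 at the position of the correct digit and 0 elsewhere. Both sets of network outputs are made-up numbers.

```python
def squared_error_cost(predicted, actual):
    """Sum of squared differences between predicted and actual outputs."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual))

# The image shows the digit 3, so only output neuron 3 should be 1.
actual = [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]

# Hypothetical network outputs before and after some training.
untrained = [0.4, 0.6, 0.1, 0.3, 0.8, 0.5, 0.2, 0.9, 0.7, 0.3]
trained = [0.0, 0.1, 0.0, 0.9, 0.0, 0.0, 0.0, 0.1, 0.0, 0.0]

print(round(squared_error_cost(untrained, actual), 2))  # 3.34
print(round(squared_error_cost(trained, actual), 2))    # 0.03
```

The lower cost of the second output list reflects that it is much closer to the actual value.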

Backward propagation

In this process, we modify the weight and bias values of each neuron by using the value of the cost function. This is done layer by layer, from the output layer back to the input layer.
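
To make the update step concrete, here is a deliberately tiny sketch: a single sigmoid neuron with one input, trained by gradient descent on a squared-error cost. The starting values, the learning rate, and the single training example are all assumptions for illustration; a real network repeats the same chain-rule step for every layer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# One hypothetical training example: a single input value and its target output.
x, target = 1.0, 1.0

# Arbitrary starting values and a learning rate.
weight, bias, learning_rate = -0.5, 0.0, 0.5

def cost(w, b):
    """Squared difference between the neuron's output and the target."""
    return (sigmoid(w * x + b) - target) ** 2

initial_cost = cost(weight, bias)

for _ in range(100):
    output = sigmoid(weight * x + bias)
    # Chain rule: d(cost)/dz = 2 * (output - target) * output * (1 - output)
    dcost_dz = 2.0 * (output - target) * output * (1.0 - output)
    weight -= learning_rate * dcost_dz * x  # dz/dweight = x
    bias -= learning_rate * dcost_dz        # dz/dbias = 1

final_cost = cost(weight, bias)
print(final_cost < initial_cost)  # True
```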

The issue in Artificial Neural Network

In this example we took an image resolution of 28*28, so there are 784 input neurons, each hidden layer contains 16 neurons, and the output layer contains 10 neurons. Every neuron is connected to every neuron in the next layer, and every neuron after the input layer has its own bias. That gives 784*16 + 16*16 + 16*10 = 12,960 weights plus 16 + 16 + 10 = 42 biases, so 13,002 values in total. But in reality, an image can be 4000*5000 pixels or more. The first hidden layer alone would then need 4000*5000*16 = 320,000,000 weights. All of these values have to be updated on every training pass, and if we run it many times the system may run out of memory and crash. Also, think about data like audio and video.
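
One common way to count the values a fully connected network has to learn is one weight per connection between neighbouring layers plus one bias per neuron after the input layer. The sketch below uses that convention and assumes two hidden layers of 16 neurons and 10 output neurons; `count_parameters` is a hypothetical helper.

```python
def count_parameters(layer_sizes):
    """Count weights (one per connection) and biases (one per non-input neuron)."""
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    biases = sum(layer_sizes[1:])
    return weights + biases

small = count_parameters([28 * 28, 16, 16, 10])
large = count_parameters([4000 * 5000, 16, 16, 10])

print(small)  # 13002
print(large)  # 320000458
```

Almost all of the growth comes from the first layer, because every input pixel is connected to every neuron in the first hidden layer.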

To avoid this problem, we need to reduce the data size by using the Convolutional Neural Network (CNN).

Convolutional Neural Network (CNN)

It is used to reduce the size of the data without losing important information. This is done through a few steps:

- Convolution

- Normalization

- Pooling

- Flattening

- ANN

Convolution

Our input data is in the form of a 28*28 matrix. Let's take a 3*3 matrix that holds some values; it is called a filter. The filter slides over the input matrix, and at each position the filter values are multiplied element by element with the input values they cover. The sum of those products is stored, and the stored values form a separate, smaller matrix. Multiple filters can be created as the user requires, and a separate output matrix is created for each filter. This process helps to avoid losing useful information in the data.
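
A plain Python sketch of the sliding-filter step, assuming no padding and a stride of 1, and storing the sum of the element-by-element products at each position (the usual definition of convolution in CNNs). The 4 x 4 image and the vertical-edge filter are made-up examples.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    At each position the overlapping values are multiplied element by
    element and summed, which gives one value of the output matrix.
    """
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(k):
                for j in range(k):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map

# Hypothetical 4 x 4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hypothetical 3 x 3 filter that responds to vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

print(convolve2d(image, kernel))  # [[27, 27], [27, 27]]
```

With the same settings, a 28 x 28 input and a 3 x 3 filter produce a 26 x 26 output matrix.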

Normalization

Some values will be negative after the convolution step. To solve this problem, the Rectified Linear Unit (ReLU) function is used to convert the negative values to zero.
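
ReLU itself is a one-line function. The sketch below applies it to a made-up 2 x 2 convolution output.

```python
def relu(value):
    """Return the value unchanged if it is positive, otherwise 0."""
    return max(0, value)

feature_map = [[27, -13], [-4, 8]]  # hypothetical convolution output
activated = [[relu(v) for v in row] for row in feature_map]

print(activated)  # [[27, 0], [0, 8]]
```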

Pooling

This is similar to the convolution process, but here the filter matrix holds no values. Instead, the filter takes the maximum pixel value from the part of the matrix it covers. This keeps the strongest values, such as the borders between objects in the image.
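
A sketch of max pooling, assuming non-overlapping 2 x 2 windows (a common choice, not necessarily the one used in this blog's project). The input matrix is a made-up example.

```python
def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled

feature_map = [
    [1, 3, 2, 0],
    [5, 6, 1, 2],
    [0, 2, 9, 4],
    [1, 1, 3, 7],
]

print(max_pool2d(feature_map))  # [[6, 2], [2, 9]]
```

Each 2 x 2 window is reduced to a single value, so the height and width of the matrix are halved.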

Convolution and pooling are done multiple times, and finally we get a matrix with a much smaller resolution than the input.

Flattening

The output data is in matrix format, and it has to be converted into a single column.
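
A minimal sketch of this step, assuming two made-up 2 x 2 pooled matrices (one per filter) that are joined into a single list of values.

```python
# Two hypothetical 2 x 2 pooled matrices, one per filter.
pooled_maps = [
    [[6, 2], [2, 9]],
    [[0, 4], [7, 1]],
]

# Read every matrix row by row and join the values into one column.
flattened = [value for fmap in pooled_maps for row in fmap for value in row]

print(flattened)  # [6, 2, 2, 9, 0, 4, 7, 1]
```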

Artificial Neural Network

The flattened data is fed to the ANN as its input, and the whole ANN process is carried out. The ANN now runs faster because the input data is much smaller.

The coding part of ANN

I used the MNIST dataset, which contains 42,000 handwritten sample images, and OpenCV for the image processing.

I have added all the code to Kaggle as well as GitHub. Here I have included a few important parts of that project.

Drawing a digit in the image using OpenCV

    import cv2
    import numpy as np

    drawing = False  # True while the left mouse button is held down
    mode = True      # True enables line drawing

    # Mouse callback: draws white lines on the canvas while the button is pressed
    def paint_draw(event, former_x, former_y, flags, param):
        global current_former_x, current_former_y, drawing, mode
        if event == cv2.EVENT_LBUTTONDOWN:
            # Start a stroke and remember where it began
            drawing = True
            current_former_x, current_former_y = former_x, former_y
        elif event == cv2.EVENT_MOUSEMOVE:
            if drawing and mode:
                # Connect the previous point to the current mouse position
                cv2.line(image, (current_former_x, current_former_y), (former_x, former_y), (255, 255, 255), 2)
                current_former_x, current_former_y = former_x, former_y
        elif event == cv2.EVENT_LBUTTONUP:
            # Finish the stroke
            drawing = False
            if mode:
                cv2.line(image, (current_former_x, current_former_y), (former_x, former_y), (255, 255, 255), 2)
                current_former_x, current_former_y = former_x, former_y

    # image = np.zeros((512, 512, 3), np.uint8)
    # 140 x 140 single-channel black canvas
    image = np.zeros((140, 140, 1), np.uint8)
    cv2.namedWindow("OpenCV Paint Brush")
    cv2.setMouseCallback("OpenCV Paint Brush", paint_draw)

    while True:
        cv2.imshow("OpenCV Paint Brush", image)
        k = cv2.waitKey(1) & 0xFF
        if k == 27:  # Escape key: save the drawing and quit
            cv2.imwrite("number.png", image)
            break

    cv2.destroyAllWindows()

Complete script

Finally, deep learning techniques are widely used in every sector, and they help to solve problems quickly, often before a human detects them.

P.S. - Average screen time is around 8 hours throughout the world. It not only makes you weak, it also increases the distance between you and your family. Spend more time with your family and friends than with your mobile and laptop.
